¶ Configuration

¶ ARC size

Sources: How to set up ZFS ARC size on Ubuntu/Debian Linux; Proxmox ZFS Performance Tuning

Determine the needed ARC size:

- For file servers such as CIFS/NFS, set up a large ARC with an L2ARC to speed up file access.

- For MySQL/MariaDB/PostgreSQL, set up a small ARC and tune the database's own caching, along with Redis or Memcached.

Create a new zfs.conf file:

    sudo nano /etc/modprobe.d/zfs.conf

with the following content:

    # Setting up ZFS ARC size on Ubuntu as per our needs
    # Set Max ARC size => 2 GiB == 2147483648 bytes
    options zfs zfs_arc_max=2147483648
    # Set Min ARC size => 1 GiB == 1073741824 bytes
    options zfs zfs_arc_min=1073741824
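The byte values above are just GiB × 1024³ (2 × 1073741824 = 2147483648). A minimal sketch of two hypothetical helpers (gib_to_bytes and zfs_arc_conf are made-up names) that print the two options lines for other sizes, so the arithmetic doesn't have to be done by hand. It only prints; writing the file is left to you.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert whole GiB to bytes (1 GiB = 1024^3 bytes).
gib_to_bytes() {
  echo $(( $1 * 1024 * 1024 * 1024 ))
}

# Hypothetical helper: print the zfs.conf options lines for a max and min
# ARC size given in GiB. It only prints to stdout; it does not write any file.
zfs_arc_conf() {
  local max_gib="$1" min_gib="$2"
  if [ "$min_gib" -gt "$max_gib" ]; then
    echo "zfs_arc_min must not exceed zfs_arc_max" >&2
    return 1
  fi
  echo "options zfs zfs_arc_max=$(gib_to_bytes "$max_gib")"
  echo "options zfs zfs_arc_min=$(gib_to_bytes "$min_gib")"
}
```

For example, a 4 GiB max / 1 GiB min configuration (without the comment lines) could be written with:

    zfs_arc_conf 4 1 | sudo tee /etc/modprobe.d/zfs.conf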

Update the existing initramfs for all installed kernels:

    sudo update-initramfs -u -k all

Reboot:

    sudo reboot

Verify that the correct ZFS ARC size is set:

    cat /sys/module/zfs/parameters/zfs_arc_min
    cat /sys/module/zfs/parameters/zfs_arc_max
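A small sketch that compares the two parameter files with the expected byte values in one go. check_arc_limits is a hypothetical name; the directory defaults to the sysfs path used above and can be overridden with a third argument (handy for testing).

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare the live ARC limits with expected byte values.
# Directory defaults to the ZFS on Linux sysfs parameter path.
check_arc_limits() {
  local want_max="$1" want_min="$2"
  local dir="${3:-/sys/module/zfs/parameters}"
  local got_max got_min
  got_max=$(cat "$dir/zfs_arc_max") || return 1
  got_min=$(cat "$dir/zfs_arc_min") || return 1
  if [ "$got_max" = "$want_max" ] && [ "$got_min" = "$want_min" ]; then
    echo "OK: zfs_arc_max=$got_max zfs_arc_min=$got_min"
  else
    echo "MISMATCH: zfs_arc_max=$got_max (want $want_max), zfs_arc_min=$got_min (want $want_min)"
    return 1
  fi
}
```

After the reboot, run check_arc_limits 2147483648 1073741824. A value of 0 in either file means the module default is in effect, which usually means the zfs.conf options were not picked up.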

Find the ARC stats on Linux:

    arcstat
    arc_summary
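On ZFS on Linux, arcstat and arc_summary read their numbers from the kstat file /proc/spl/kstat/zfs/arcstats, whose rows have the form "name type data". A minimal sketch of two hypothetical helpers that pull a single field (e.g. size or c_max) and compute the ARC hit ratio as hits / (hits + misses).

```shell
#!/usr/bin/env bash
# Path of the ARC kstats file on ZFS on Linux (overridable for testing).
ARCSTATS="${ARCSTATS:-/proc/spl/kstat/zfs/arcstats}"

# Hypothetical helper: print the value (third column) of one arcstats field.
# Exits non-zero if the field is not present.
arc_field() {
  awk -v key="$1" '$1 == key { print $3; found = 1 } END { exit !found }' "${2:-$ARCSTATS}"
}

# Hypothetical helper: ARC hit ratio in percent, hits / (hits + misses) * 100.
arc_hit_ratio() {
  awk '$1 == "hits" { h = $3 } $1 == "misses" { m = $3 }
       END { if (h + m == 0) { print "n/a" } else { printf "%.1f\n", 100 * h / (h + m) } }' \
    "${1:-$ARCSTATS}"
}
```

For example, arc_field size shows the current ARC size in bytes and arc_field c_max the effective maximum, which should match zfs_arc_max once the setting is active.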

¶ SWAP on ZFS

Create a zvol:

    zfs create -V 32G -b $(getconf PAGESIZE) -o compression=zle \
        -o logbias=throughput -o sync=standard \
        -o primarycache=metadata -o secondarycache=none \
        -o com.sun:auto-snapshot=false rpool/swap

Format the swap device:

    mkswap -f /dev/zvol/rpool/swap

Update /etc/fstab:

    echo /dev/zvol/rpool/swap none swap defaults 0 0 >> /etc/fstab
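Appending with >> adds a duplicate entry every time the step is repeated. A sketch of a hypothetical helper that only appends when an identical line is not already present (it checks exact matches only, so an entry with different spacing is not detected). It writes to /etc/fstab by default, so run it as root.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append a line to an fstab-style file only if an
# identical line is not already there. Target file defaults to /etc/fstab.
add_fstab_line() {
  local line="$1" file="${2:-/etc/fstab}"
  # -x: whole-line match, -F: fixed string, -q: quiet
  if grep -qxF -- "$line" "$file" 2>/dev/null; then
    echo "already present: $line"
  else
    printf '%s\n' "$line" >> "$file"
    echo "added: $line"
  fi
}
```

Usage: add_fstab_line "/dev/zvol/rpool/swap none swap defaults 0 0"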

Enable the swap device:

    swapon -av

¶ Create additional ZFS drive on Proxmox

This needs to be done from the node that contains the drives; it does not work when you are logged in to another node in the cluster.

¶ Docker on ZFS

I wanted to run Docker inside an LXC container. That might sound odd, but I wanted it anyway. However, running Docker in LXC on a ZFS mount was really slow, so I had to change the setup a bit. Docker's ZFS storage driver is officially supported, though: https://docs.docker.com/storage/storagedriver/zfs-driver/.

Currently it doesn't seem practical to get Docker inside LXC to work on ZFS directly. It can be done with a lot of manual work, but my experience was really poor.

¶ Option 1: Create LVM Thin pool

Sources: LVM on top of linux zfs to use Openstack with nova-volume; How to enable rc.local shell script on systemd while booting Linux system

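Create a sparse zvol:

    zfs create -s -V 32G rpool/lvm-docker

lvm-docker is the zvol name and can be anything; 32G is its size (arbitrary, to be honest: it depends on how many images you'll have and how you manage other container data). To increase it later, grow the zvol, let the loop device pick up the new size, then grow the physical volume and the thin pool:

    zfs set volsize=64G rpool/lvm-docker
    losetup -c /dev/loop2
    pvresize /dev/loop2
    lvextend -l +100%FREE vg-docker/lv-docker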

Check that it's actually sparse: volsize should be 32G (the maximum it can take), and referenced is how much is actually used (very little right after creation):

    zfs get volsize,referenced rpool/lvm-docker
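For a quick percentage, zfs get can print script-friendly output: -H drops the header and separates fields with tabs, -p prints exact byte values. A sketch of a hypothetical helper that reads that output on stdin and reports how much of the zvol is actually referenced.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "property<TAB>value" lines as printed by
#   zfs get -Hp -o property,value volsize,referenced <zvol>
# and report referenced vs. maximum size.
sparse_usage() {
  awk -F'\t' '$1 == "volsize" { v = $2 } $1 == "referenced" { r = $2 }
    END {
      if (v == 0) { print "volsize missing"; exit 1 }
      printf "referenced %.1f MiB of %.1f GiB (%.2f%%)\n", r / 1048576, v / 1073741824, 100 * r / v
    }'
}
```

Usage:

    zfs get -Hp -o property,value volsize,referenced rpool/lvm-docker | sparse_usage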

Find the device the zvol points to (the first command returns e.g. /dev/zd256, which might change after a reboot!) and attach it to a loop device (if you get "failed to set up loop device: Device or resource busy", try loop3, loop4, etc.):

    file /dev/zvol/rpool/lvm-docker
    losetup /dev/loop2 /dev/zd256

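Partition the device by running

    fdisk /dev/loop2

and entering: n, p, 1, ENTER, ENTER, t, 8e, w (a new primary partition 1 spanning the whole device, with type 8e, Linux LVM).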

Create a physical volume and the volume group vg-docker:

    pvcreate /dev/loop2
    vgcreate vg-docker /dev/loop2

Create a logical volume lv-docker of type thin-pool in the volume group just created:

    lvcreate -n lv-docker --type thin-pool -l 100%FREE vg-docker

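Make the device mapping permanent so it survives reboots:

    nano /etc/rc.local

Paste the following (it uses the /dev/zvol/... path instead of /dev/zd256, because the zdN number can change between reboots):

    #!/bin/sh
    losetup /dev/loop2 /dev/zvol/rpool/lvm-docker
    exit 0

Then make it executable and enable the service:

    chmod +x /etc/rc.local
    systemctl enable rc-local.service --now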
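A sketch of a more defensive rc.local body, assuming the zvol and volume group names from the steps above. The hypothetical attach_zvol_loop resolves /dev/zvol/rpool/lvm-docker to whatever /dev/zdN it currently is, does nothing if a loop device already backs it (losetup -j), and otherwise attaches it to the first free loop device (losetup -f --show) rather than a fixed loop2. LVM identifies physical volumes by their on-disk metadata, so the volume group should still be found on a different loopN; the vgchange -ay call afterwards is an assumption to make sure the thin pool is active.

```shell
#!/bin/sh
# Hypothetical helper for /etc/rc.local: attach a zvol to a loop device
# without hard-coding /dev/zdN or the loop device number.
attach_zvol_loop() {
  zvol="$1"
  # Resolve /dev/zvol/... to the current /dev/zdN node.
  dev=$(readlink -f "$zvol") || return 1
  if [ ! -e "$dev" ]; then
    echo "zvol $zvol not found" >&2
    return 1
  fi
  # Already attached? Then there is nothing to do (safe to re-run).
  if [ -n "$(losetup -j "$dev")" ]; then
    return 0
  fi
  # Attach to the first free loop device and print its name.
  losetup -f --show "$dev"
}
```

In /etc/rc.local, after the function definition:

    attach_zvol_loop /dev/zvol/rpool/lvm-docker
    vgchange -ay vg-docker
    exit 0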

In Proxmox --> Datacenter --> Storage, add an LVM-Thin storage:

- ID: proxmox-lvm-docker
- Volume group: vg-docker
- Thin Pool: lv-docker
- Content: Container
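The same storage can be added from the node's shell with pvesm. A sketch assuming the IDs above, where --content rootdir corresponds to "Container" in the GUI. add_lvm_docker_storage is a hypothetical name, and RUN is a hypothetical dry-run hook: RUN=echo prints the command instead of running it.

```shell
#!/usr/bin/env bash
# CLI counterpart of the GUI step: add an LVM-Thin storage for containers.
# RUN is a hypothetical dry-run hook (RUN=echo only prints the command).
add_lvm_docker_storage() {
  ${RUN:-} pvesm add lvmthin proxmox-lvm-docker \
    --vgname vg-docker --thinpool lv-docker --content rootdir
}
```

Run RUN=echo add_lvm_docker_storage first to inspect the command, then add_lvm_docker_storage on the node itself.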

Add a mount point to the LXC container:

Through the CLI, which allocates an 8 GiB volume on the thin pool and adds the corresponding mpX line to /etc/pve/lxc/<vmid>.conf:

    pct set <vmid> -mpX proxmox-lvm-docker:8,mp=/var/lib/docker,backup=0

where X is the number for your mount point (in case there are others already present).

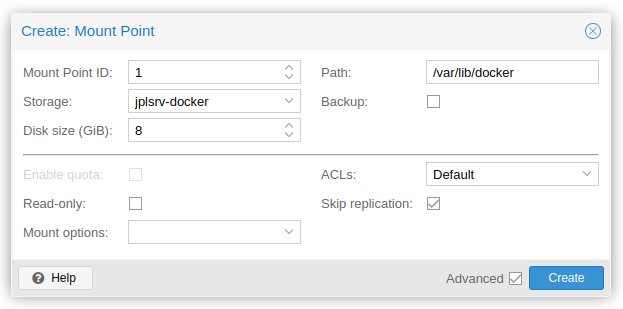

Or using the GUI: select the LXC container --> Add --> Mount Point:

- Storage: proxmox-lvm-docker
- Disk size (GiB): 8
- Path: /var/lib/docker

¶ Option 2: Create EXT4 partition on ZFS

Create a sparse zvol:

    zfs create -s -V 32G rpool/docker

docker is the zvol name and can be anything; 32G is its size (arbitrary, to be honest: it depends on how many images you'll have and how you manage other container data). To increase it later, grow the zvol and then the ext4 filesystem:

    zfs set volsize=64G rpool/docker
    resize2fs /dev/zvol/rpool/docker

Check that it's actually sparse: volsize should be 32G (the maximum it can take), and referenced is how much is actually used (very little right after creation):

    zfs get volsize,referenced rpool/docker

Format it as ext4:

    mkfs.ext4 /dev/zvol/rpool/docker

Mount it in a temporary location and change the ownership to 100000:100000 (as mentioned in one of the replies), so that root inside an unprivileged container can write to it:

    mkdir /tmp/zvol_tmp
    mount /dev/zvol/rpool/docker /tmp/zvol_tmp
    chown -R 100000:100000 /tmp/zvol_tmp
    umount /tmp/zvol_tmp
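Where 100000 comes from: with Proxmox's default mapping for unprivileged containers (root:100000:65536 in /etc/subuid and /etc/subgid), container IDs 0 to 65535 map to host IDs 100000 to 165535, so container root (0) is host ID 100000. A sketch of that arithmetic as a hypothetical helper; the base and range are assumptions that only hold for the default mapping.

```shell
#!/usr/bin/env bash
# Hypothetical helper: host UID/GID for a container UID/GID, assuming the
# default unprivileged mapping (base 100000, range 65536). Pass a different
# base/range if /etc/subuid or /etc/subgid says otherwise.
host_id_for() {
  local cid="$1" base="${2:-100000}" range="${3:-65536}"
  if [ "$cid" -lt 0 ] || [ "$cid" -ge "$range" ]; then
    echo "container ID $cid is outside the mapped range" >&2
    return 1
  fi
  echo $(( base + cid ))
}
```

For example, a file that should belong to user 1000 inside the container would need chown $(host_id_for 1000) on the host, i.e. 101000.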

Add a mount point:

- Create the directory /mnt/docker:

    mkdir /mnt/docker

- Open /etc/fstab:

    nano /etc/fstab

- Add these lines (pass number 2, since 1 is reserved for the root filesystem):

    # docker volume
    /dev/zvol/rpool/docker /mnt/docker ext4 defaults 0 2

- Mount the volume:

    mount -a
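To confirm that mount -a actually mounted the zvol, the mount table can be checked. A sketch of a hypothetical helper that looks up a mount point in a /proc/mounts-style file (fields: source, mount point, type, options, dump, pass); findmnt /mnt/docker shows the same information. The source may be listed as the underlying /dev/zdN node rather than the /dev/zvol/... path.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "source fstype" for a mount point, read from a
# /proc/mounts-style file. Exits non-zero if the path is not mounted.
mount_info() {
  awk -v mp="$1" '$2 == mp { print $1, $3; found = 1 } END { exit !found }' "${2:-/proc/mounts}"
}
```

Usage: mount_info /mnt/docker should print the zvol device followed by ext4.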

In Proxmox --> Datacenter --> Storage, add a Directory storage:

- ID: proxmox-rpool-docker
- Directory: /mnt/docker
- Content: Container
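The CLI counterpart of this step, as a sketch with the same IDs; add_docker_dir_storage is a hypothetical name and RUN is a hypothetical dry-run hook (RUN=echo prints the command instead of running it).

```shell
#!/usr/bin/env bash
# CLI counterpart of the GUI step: add a Directory storage for containers.
# RUN is a hypothetical dry-run hook (RUN=echo only prints the command).
add_docker_dir_storage() {
  ${RUN:-} pvesm add dir proxmox-rpool-docker --path /mnt/docker --content rootdir
}
```

Since /mnt/docker is a separately mounted filesystem, Proxmox's is_mountpoint option for directory storages may also be worth setting, so the storage is treated as offline instead of silently writing to the root filesystem when the zvol is not mounted.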

Add a mount point to the LXC container:

Through the config file, by adding this line to /etc/pve/lxc/<vmid>.conf:

    nano /etc/pve/lxc/<vmid>.conf

    mpX: /mnt/docker,mp=/var/lib/docker,backup=0

where X is the number for your mount point (in case there are others already present).

Or using the GUI: select the LXC container --> Add --> Mount Point:

- Storage: proxmox-rpool-docker
- Disk size (GiB): 8
- Path: /var/lib/docker

¶ Install docker

SSH into the container.
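One common way to continue from here, as a sketch only: it assumes a Debian/Ubuntu-based container and Docker's convenience script from https://get.docker.com, which is not necessarily what was used for this setup. On the Proxmox host, Docker inside LXC usually also needs the nesting feature (plus keyctl for unprivileged containers), e.g. pct set <vmid> --features nesting=1,keyctl=1. install_docker is a hypothetical name and RUN a hypothetical dry-run hook.

```shell
#!/usr/bin/env bash
# Sketch: install Docker inside a Debian/Ubuntu-based container as root,
# using Docker's convenience script (an assumption, see the lead-in).
# RUN is a hypothetical dry-run hook (RUN=echo only prints the commands).
install_docker() {
  ${RUN:-} apt-get update
  ${RUN:-} apt-get install -y curl ca-certificates
  ${RUN:-} curl -fsSL https://get.docker.com -o /tmp/get-docker.sh
  ${RUN:-} sh /tmp/get-docker.sh
}
```

Afterwards, docker info shows the storage driver in use; with /var/lib/docker on ext4 or LVM-thin it should report overlay2 rather than zfs.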

¶ Commands

¶ Get zfs disk/pool info

sudo zdb

¶ Get compression setting

sudo zfs get compression <POOL>

¶ Set compression setting

sudo zfs set compression=lz4 <POOL>

¶ Get atime setting

sudo zfs get atime <POOL>

¶ Set atime setting

sudo zfs set atime=off <POOL>

¶ Get sync setting

sudo zfs get sync <POOL>

sudo zfs get sync <POOL>/<DATASET>

¶ Set sync setting

sudo zfs set sync=disabled <POOL>

sudo zfs set sync=disabled <POOL>/<DATASET>
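Because sync (like other properties) is inherited, setting it on the pool affects every child dataset that doesn't override it. A sketch of a hypothetical helper that lists where sync=disabled is in effect and whether it is set locally or inherited, reading the tab-separated output of zfs get -H.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "name<TAB>value<TAB>source" lines as printed by
#   zfs get -H -o name,value,source sync
# and print the datasets with sync=disabled plus where the value comes from.
sync_disabled() {
  awk -F'\t' '$2 == "disabled" { print $1 " (" $3 ")" }'
}
```

Usage:

    zfs get -H -o name,value,source sync | sync_disabled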

¶ Resilver pfSense boot pool

https://forum.netgate.com/topic/112490/how-to-2-4-0-zfs-install-ram-disk-hot-spare-snapshot-resilver-root-drive/2

https://www.reddit.com/r/PFSENSE/comments/gceeci/zfs_mirror_recovery_process/

¶ Get pool status

zpool status

¶ Offline failing disk

Replace <x> with the actual drive number in all commands below.

    sudo zpool offline pfSense da<x>p4

¶ Insert new disk and create partition table

¶ Get the current table

The first command shows the partition labels in the column under "GPT":

    gpart show -l

    =>       40  585937424  da1  GPT  (279G)
             40     409600    1  efiboot1  (200M)
         409640       1024    2  gptboot1  (512K)
         410664        984       - free -  (492K)
         411648    8388608    3  swap1  (4.0G)
        8800256  577136640    4  zfs1  (275G)
      585936896        568       - free -  (284K)

The second command shows the partition types in the same column:

    gpart show

    =>       40  585937424  da1  GPT  (279G)
             40     409600    1  efi  (200M)
         409640       1024    2  freebsd-boot  (512K)
         410664        984       - free -  (492K)
         411648    8388608    3  freebsd-swap  (4.0G)
        8800256  577136640    4  freebsd-zfs  (275G)
      585936896        568       - free -  (284K)
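The first two numeric columns are the start and size in sectors. Assuming 512-byte sectors (consistent with 409600 sectors being shown as 200M), a sketch of two hypothetical helpers to convert between sectors and GiB when recreating the table.

```shell
#!/usr/bin/env bash
# Assumed sector size in bytes (matches the da1 listing: 409600 sectors = 200M).
SECTOR=512

# Hypothetical helper: sectors -> GiB with two decimals.
sectors_to_gib() {
  awk -v s="$1" -v b="$SECTOR" 'BEGIN { printf "%.2f\n", s * b / 1073741824 }'
}

# Hypothetical helper: whole GiB -> sectors.
gib_to_sectors() {
  echo $(( $1 * 1073741824 / SECTOR ))
}
```

For example, sectors_to_gib 577136640 gives 275.20, while gib_to_sectors 275 gives 576716800: a partition created with -s 275G is slightly smaller than the existing zfs1 partition.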

¶ Create table

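    sudo gpart create -s gpt da<x>
    sudo gpart add -a 4k -s 200M -t efi -l efiboot<x> da<x>
    sudo gpart add -b 409640 -s 512k -t freebsd-boot -l gptboot<x> da<x>
    sudo gpart add -b 411648 -s 4G -t freebsd-swap -l swap<x> da<x>
    sudo gpart add -b 8800256 -s 577136640 -t freebsd-zfs -l zfs<x> da<x>
    sudo gpart bootcode -b /boot/pmbr -p /boot/gptzfsboot -i 2 da<x>

The size of the ZFS partition is given in sectors so it matches the existing zfs1 partition exactly; -s 275G would make it slightly smaller, and zpool replace refuses a replacement device that is too small.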

¶ Get guid of zpool member

zdb
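zpool status -g prints vdev GUIDs instead of device names, which is often the quickest route. To map GUIDs to device paths from the zdb dump instead, a sketch of a hypothetical awk helper; it assumes each leaf vdev's guid: line comes before its path: line, which is the usual layout of zdb's cached-config output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read zdb's cached-config dump on stdin and print
# "guid path" for every vdev that has a path: line. Assumes the guid: line
# precedes the path: line within each vdev block.
guid_paths() {
  awk -v q="'" '
    $1 == "guid:" { g = $2 }
    $1 == "path:" { p = $2; gsub(q, "", p); print g, p }
  '
}
```

Usage: sudo zdb | guid_paths, then use the GUID printed next to the failed da<x>p4 in the replace command below.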

¶ Replace disk in pool

sudo zpool replace pfSense <guid> da<x>p4